03. January 2026

Admin

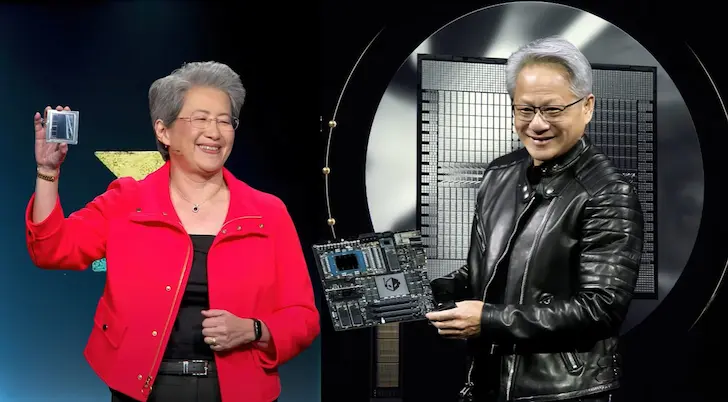

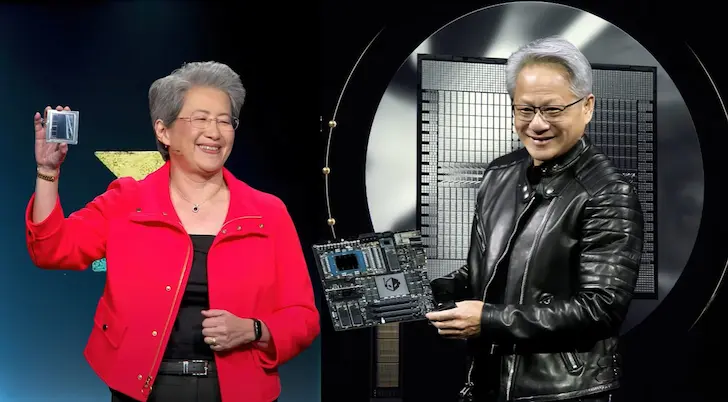

NVIDIA GB200 NVL72 Racks Deliver Up to 28× the Performance of AMD MI355X

NVIDIA has shared details of its **GB200 Grace Blackwell Superchip** and the **GB200 NVL72** rack-scale system built from it, showing large performance gains in large-scale AI workloads compared with competing hardware such as AMD's MI355X. Both are designed to accelerate generative AI, large language models, and other compute-intensive machine learning tasks.

Quick Insight:

Early performance figures suggest that GB200 NVL72 racks can deliver **up to a 28× speed advantage** over AMD's MI355X in certain AI training and inference benchmarks, which would reinforce NVIDIA's lead in AI compute hardware.

What Is NVIDIA's GB200?

• GB200 is NVIDIA's Grace Blackwell Superchip: it pairs one NVIDIA Grace CPU with two Blackwell GPUs and is designed for large neural network workloads.

• It is tailored for both training and inference of advanced AI models, including large language models (LLMs).

• The design emphasizes high throughput, high memory bandwidth, and fast interconnects, with the Grace CPU and Blackwell GPUs linked by NVLink-C2C.

NVL72 Racks: Hardware Designed for AI Scale

• GB200 NVL72 is a complete rack-scale system that connects 36 GB200 Superchips (72 Blackwell GPUs and 36 Grace CPUs) over NVLink, with liquid cooling.

• These racks are intended for data centers and enterprise AI deployments where sustained throughput and efficiency are essential.

• The design aims to simplify large AI cluster deployment and reduce overhead for scaling AI workloads.

Performance Gains Over AMD MI355X

• Initial benchmarks indicate that GB200 in NVL72 racks can outperform AMD’s MI355X by **up to 28×** in select AI tasks.

• These gains come from architectural advantages, better software optimizations, and tighter integration with AI frameworks.

• Results vary by workload, and the 28× figure is a best case for select tasks rather than a typical speedup; even so, the gap highlights NVIDIA's edge in AI compute.
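To illustrate why an "up to" figure can differ from a typical result, this minimal sketch computes per-workload speedups and compares the best case with a geometric mean. The function names, workload names, and throughput numbers are hypothetical placeholders chosen for illustration, not measured GB200 NVL72 or MI355X results.

```python
import math


def speedups(new: dict[str, float], baseline: dict[str, float]) -> dict[str, float]:
    """Per-workload speedup = new throughput / baseline throughput."""
    return {name: new[name] / baseline[name] for name in baseline}


def summarize(ratios: dict[str, float]) -> tuple[float, float]:
    """Return (best-case "up to" figure, geometric-mean speedup)."""
    values = list(ratios.values())
    best = max(values)
    # Geometric mean: exponentiate the average of the logs.
    geo_mean = math.exp(sum(math.log(v) for v in values) / len(values))
    return best, geo_mean


if __name__ == "__main__":
    # Hypothetical throughputs (e.g. tokens/s); illustrative only, NOT real benchmark data.
    baseline = {"workload_a": 100.0, "workload_b": 100.0, "workload_c": 100.0}
    new = {"workload_a": 2800.0, "workload_b": 400.0, "workload_c": 200.0}
    best, geo = summarize(speedups(new, baseline))
    print(f"up to {best:.0f}x, geometric mean {geo:.1f}x")
```

The geometric mean is a common way to summarize ratios across benchmarks because a single outlier workload pulls it up far less than it pulls up the arithmetic mean.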

Why It Matters for AI Development

• Large language models and generative AI systems require enormous compute resources to train efficiently.

• Higher throughput and performance can significantly reduce training times and infrastructure costs.

• Organizations building advanced AI applications stand to benefit from accelerated hardware.
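A speedup on part of a job shortens the whole job only in proportion to that part's share of runtime (Amdahl's law). This hedged sketch converts a component speedup into estimated end-to-end time and cost. The accelerated fraction, baseline hours, and hourly price are hypothetical inputs, not figures from NVIDIA or AMD, and the helper names are illustrative.

```python
def amdahl_speedup(accelerated_fraction: float, component_speedup: float) -> float:
    """Overall speedup when only `accelerated_fraction` of the baseline runtime
    runs `component_speedup` times faster (Amdahl's law)."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if component_speedup <= 0.0:
        raise ValueError("component_speedup must be positive")
    # Remaining share of the original runtime after acceleration.
    remaining = (1.0 - accelerated_fraction) + accelerated_fraction / component_speedup
    return 1.0 / remaining


def estimate_job(baseline_hours: float, accelerated_fraction: float,
                 component_speedup: float, price_per_hour: float) -> tuple[float, float]:
    """Return (estimated hours, estimated cost) on the faster system.
    price_per_hour is a hypothetical all-in hourly rate for that system."""
    hours = baseline_hours / amdahl_speedup(accelerated_fraction, component_speedup)
    return hours, hours * price_per_hour


if __name__ == "__main__":
    # Hypothetical job: 1,000 baseline hours, 90% of it eligible for a 28x speedup.
    hours, cost = estimate_job(1000.0, 0.9, 28.0, price_per_hour=100.0)
    print(f"overall speedup: {amdahl_speedup(0.9, 28.0):.2f}x")
    print(f"estimated time: {hours:.1f} h, estimated cost: {cost:,.0f}")
```

With these assumed inputs, the end-to-end gain is roughly 7.6×, far below 28×, because the 10% of runtime that is not accelerated comes to dominate; the full 28× applies only when the entire job benefits.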

Final Thoughts

NVIDIA's GB200 Superchips and NVL72 rack systems represent a major step forward in AI compute performance, particularly compared with alternatives such as the AMD MI355X. For enterprises and research institutions tackling massive AI workloads, these platforms promise substantially faster results and greater efficiency, and they could shape the next wave of generative AI development.