NVIDIA Launches BlueField-4: The Processor Powering the Operating System of AI Factories

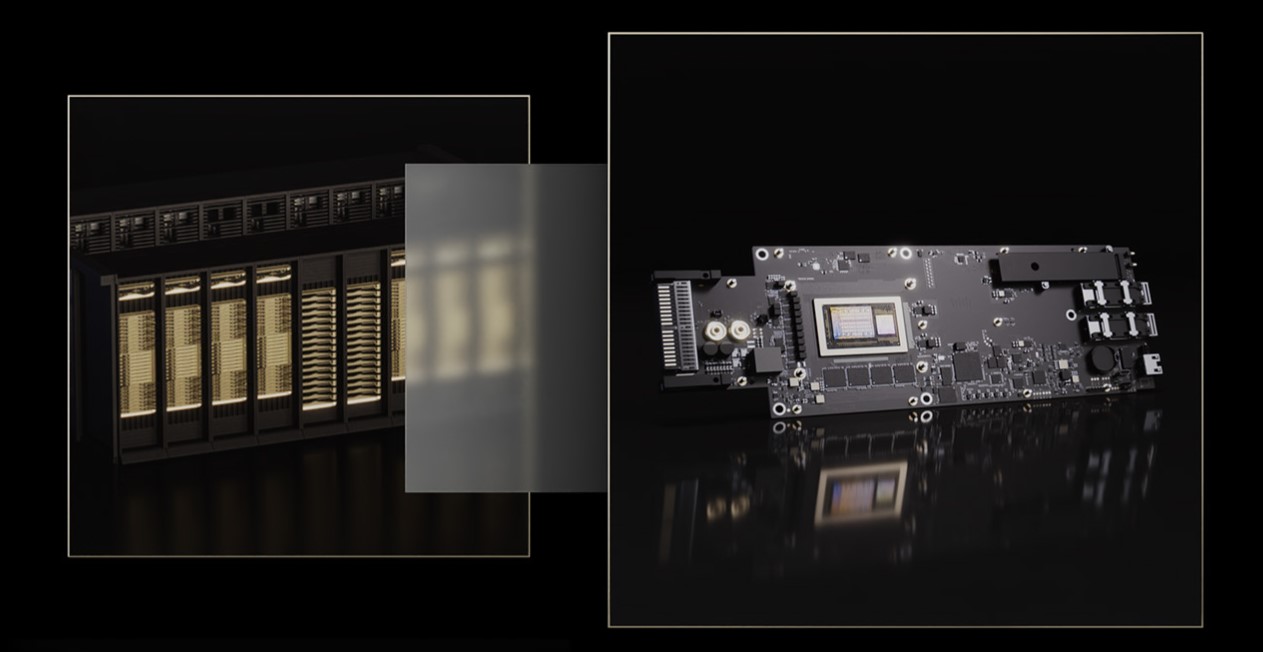

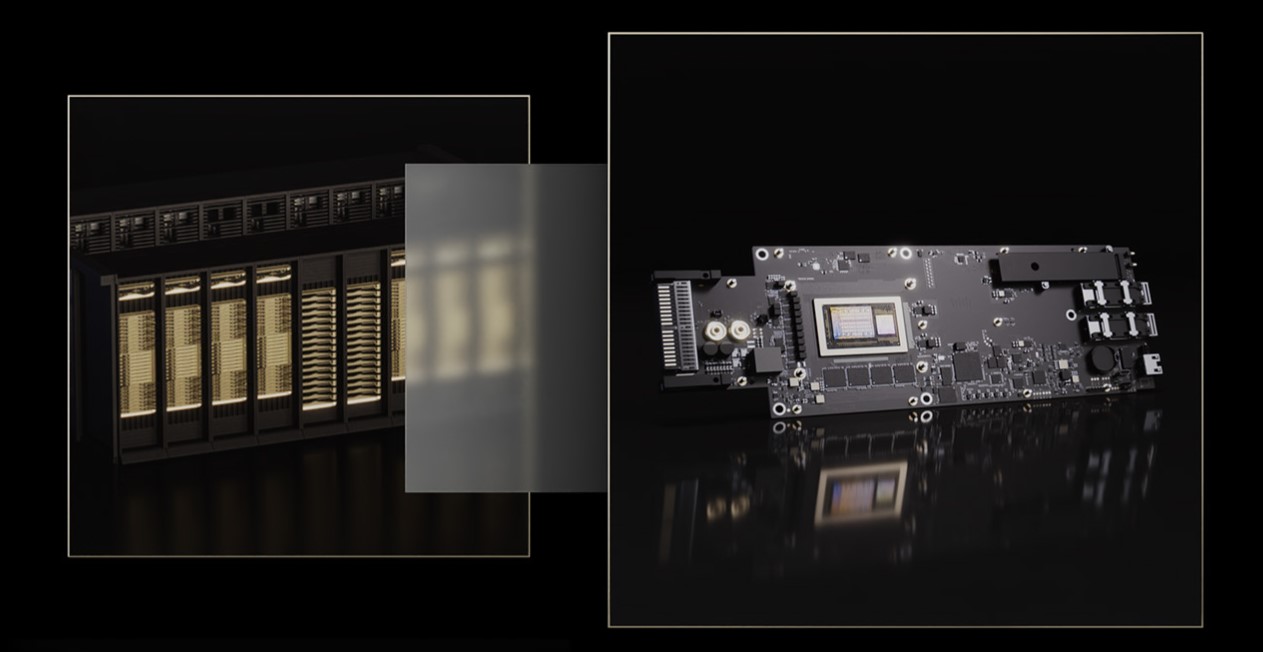

NVIDIA has unveiled the BlueField-4 data processing unit (DPU) platform, designed to drive the next generation of AI factories with substantial gains in compute, networking and security capabilities.

Quick Insight: BlueField-4 delivers roughly six times the compute power of its predecessor, BlueField-3, and supports up to 800 Gb/s of network throughput, targeting large-scale AI infrastructure across the data, networking and security domains.
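For a sense of scale, the 800 Gb/s figure can be converted into bytes per second with a little arithmetic. The helper names below are illustrative, and the calculation assumes decimal units and an ideal line rate with no protocol overhead, so real-world transfers will be slower:

```python
def line_rate_bytes_per_s(gbit_per_s: float) -> float:
    """Convert a link speed in gigabits per second to bytes per second (decimal units)."""
    return gbit_per_s * 1e9 / 8


def transfer_seconds(payload_bytes: float, gbit_per_s: float) -> float:
    """Ideal time to move a payload at full line rate, ignoring protocol overhead."""
    return payload_bytes / line_rate_bytes_per_s(gbit_per_s)


rate = line_rate_bytes_per_s(800)    # 1e11 bytes/s, i.e. 100 GB/s
seconds = transfer_seconds(1e12, 800)  # 1 TB at 800 Gb/s
print(f"{rate:.0f} bytes/s, 1 TB in {seconds:.1f} s")
```

In other words, 800 Gb/s is about 100 GB/s, so a 1 TB payload needs at least 10 seconds on a single link even before overheads are counted.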

1. Key Features

• Integration of an NVIDIA Grace CPU with ConnectX-9 networking for high-throughput performance.

• Multi-tenant networking, container-based microservices via NVIDIA DOCA, and hardware-enforced security for modern AI deployments.

• Purpose-built for “AI factory” environments — where data storage, networking and compute converge at massive scale.

2. Who It’s For

• Hyperscale cloud providers, AI training and inference platforms, and large enterprise data centers scaling AI workloads.

• Organizations needing high-performance, secure, multi-tenant AI infrastructure handling structured, unstructured and emerging AI-native data.

• Infrastructure designers looking to offload data-plane functions (networking, storage, security) from host CPUs and scale more efficiently.

3. Why It Matters

• As AI workloads grow rapidly (e.g., models trained and served on trillions of tokens), bottlenecks in data movement, networking and security are becoming a major constraint, and BlueField-4 is designed to target them directly.

• By offloading key infrastructure functions to the DPU, host CPUs are freed to focus on AI-related compute, improving overall system efficiency.

• Hardware-enforced security and tenant isolation are intended to let multi-tenant AI deployments scale more safely, including in regulated environments.
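The offload argument can be made concrete with a back-of-envelope model. Everything here is hypothetical: the function name, the 64-core host, the 25% infrastructure share and the 10% residual are illustrative inputs, not measured BlueField-4 figures:

```python
def host_cores_for_apps(host_cores: int, infra_fraction: float, residual_fraction: float) -> float:
    """Estimate host cores left for application (e.g. AI) work. Hypothetical model.

    infra_fraction: share of host cores spent on networking, storage and security tasks.
    residual_fraction: share of that infrastructure work still running on the host
        (1.0 = no offload, 0.0 = fully offloaded to a DPU).
    """
    for name, value in (("infra_fraction", infra_fraction), ("residual_fraction", residual_fraction)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    infra_cores = host_cores * infra_fraction
    # Only the infrastructure work that stays on the host still costs host cores.
    return host_cores - infra_cores * residual_fraction


# Hypothetical 64-core host where infrastructure tasks use 25% of the cores.
before = host_cores_for_apps(64, 0.25, residual_fraction=1.0)  # no offload
after = host_cores_for_apps(64, 0.25, residual_fraction=0.1)   # most work offloaded
print(f"app cores before: {before:.1f}, after: {after:.1f}")
```

The model is deliberately linear; actual gains depend on the workload and on how much control-plane work remains on the host after offload.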

Final Thoughts

The launch of BlueField-4 signals a shift in how AI infrastructure is architected — from simply increasing GPU count towards rethinking how data, networking and security integrate. For organizations poised to scale AI at gigascale, this platform offers a foundation built for performance, throughput and trust.

If you’re planning large-scale AI deployments, it is worth evaluating whether your infrastructure roadmap aligns with this emerging model.

Tip: When designing infrastructure for AI workloads, ensure your networking, storage, and security fabrics are not afterthoughts — they should be integral to the architecture, as this platform demonstrates.